Everyone uses ‘science’ to support their claims – the research shows, the evidence suggests, scientists agree… we’ve all read it, all heard it, and let’s be honest, we’ve all said it. The evidence bar, however low it might be set, demands scientific backing as a minimal sufficiency criterion for any kind of credibility. Science is the new playing field upon which we make daily life decisions. At the supermarket? – there’s a science for that. Trying to decide which outrageously expensive cream to rub on your skin? – check the scientific research. Buying a car? – choose the one with the lowest emissions, as tested by science. When claims conflict, the meta-game of whose science is better, bigger, faster, stronger ensues.

With everyone selling science, the burden falls upon consumers of information (and that includes pretty much everyone) to judge, critique and reason.

Learning without thought is labor lost…

But judging, critiquing and making reasoned decisions about scientific evidence isn’t easy. In an excellent (open access) chapter for the book Psychology of Learning and Motivation, Shah et al. (2017) highlight the challenge, review the issues identified so far and offer some thoughts on overcoming the problem.

What Makes Everyday Scientific Reasoning So Challenging?

People are regular consumers of science claims presented in newspapers, advertisements, scientific articles and word of mouth. Informed citizens are expected to use this information to make decisions about health, behavior, and public policy. However, media reports of research often overstate the implications of scientific evidence, overlook methodological or statistical flaws or even present pseudoscience. People need to learn to distinguish between high quality science, low quality science and pseudoscience. Unfortunately, students and sometimes even trained scientists make errors when reasoning about evidence.

Shah et al. offer a number of factors that contribute to erroneous reasoning about science. Here are just three of them:

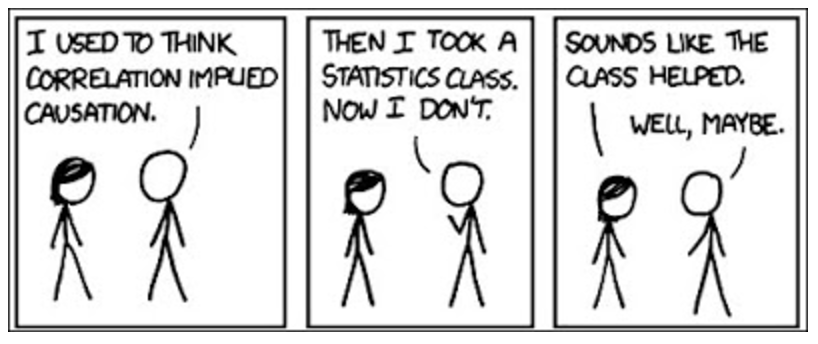

1. Causality Bias

A common theory-evidence coordination error and problem of internal validity is drawing strong causal conclusions based on correlational data or judging that those who do so are correct. If two variables are correlated there are several possible reasons: variable a causes variable b; variable b causes variable a; variable c causes both a and b; there is an interaction such that a causes b and in turn b causes a; or the correlation is spurious or coincidental.

Scientists recognize that the inference of causation based on covariation plus a mechanistic model is not solid proof and that covariation data leave open alternative explanations. Unfortunately, for many everyday contexts individuals can easily identify potential mechanisms for many relationships and thus may readily accept causal models of correlational data even though the presumed mechanisms have little validity or are merely assumed.
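To make the "variable c causes both a and b" case concrete, here is a minimal simulation sketch. It is not from Shah et al.; the variable names, the noise model and the sample size are all assumptions chosen purely for illustration. Neither a nor b influences the other, yet they come out clearly correlated, and the correlation all but disappears once c's contribution is subtracted out.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n=5000, seed=0):
    """Hypothetical data: c drives both a and b; a and b never touch each other."""
    rng = random.Random(seed)
    c = [rng.gauss(0, 1) for _ in range(n)]
    a = [ci + rng.gauss(0, 1) for ci in c]  # a = c + independent noise
    b = [ci + rng.gauss(0, 1) for ci in c]  # b = c + independent noise
    return a, b, c


a, b, c = simulate()

# Raw correlation between a and b (moderate, despite no causal link between them).
r_ab = pearson(a, b)

# Subtract the common cause (possible here only because we built the data
# and know exactly how c enters a and b); what is left is independent noise.
resid_a = [ai - ci for ai, ci in zip(a, c)]
resid_b = [bi - ci for bi, ci in zip(b, c)]
r_resid = pearson(resid_a, resid_b)

print(f"correlation of a and b:          {r_ab:.2f}")
print(f"correlation after removing c:    {r_resid:.2f}")
```

In real data the common cause is rarely handed to you like this, which is exactly why a plausible-sounding mechanism linking a to b is not enough on its own.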

Henry David Thoreau suggested that castles built in the air could be saved by putting foundations under them; the equivalent in health care seems to be building a sciency-sounding mechanism under dodgy correlational findings.

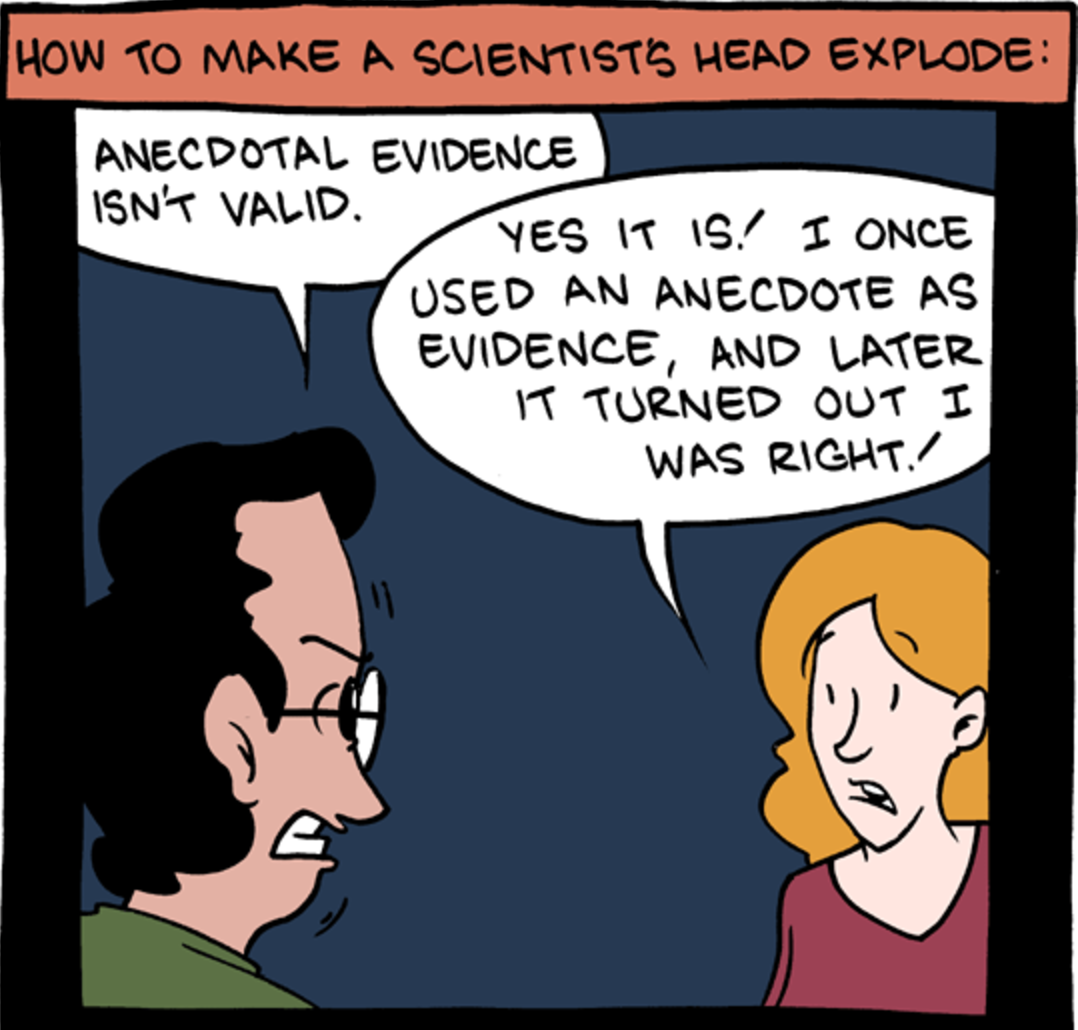

2. Presence of anecdotes

In general, people tend to pay more attention to anecdotal information compared to statistical information in the context of decision making… Across two studies, college students read and evaluated a set of fictional scientific news articles. These articles provided summaries of research studies in psychology. However, all of the articles made unwarranted interpretations of the evidence, such as making strong conclusions from weak evidence or implying causality from correlational results… Even after controlling for important variables, such as level of college training, knowledge of scientific concepts and prior beliefs, the presence of anecdotal stories significantly decreased students’ ability to provide scientific evaluation of the studies.

Storytelling and narrative are powerful educational tools, but they are clearly a double-edged sword.

3. Microlevel evidence

When neuroscience [microlevel evidence] is mentioned in an explanation or study description, people tend to rate the explanation/description as being higher in quality. Reports that include neuroimaging evidence often include brain pictures as well, perhaps showing results of fMRI analyses, which could also have an effect on scientific evaluations.

The catch, however, is that researchers find that irrelevant neuroscience information, which has no bearing on the study being described, also improves ratings of explanations and study quality. In this way, neuroscience information can have a “seductive” appeal, such that people will judge studies containing neuroscience information more favorably regardless of whether they actually understand it… people may be at higher risk for being “seduced” by reductionist [microlevel] details, regardless of their relevance or logical relation to the study being reported. (emphasis added)

The image above is from the most recent issue of an Australian physiotherapy magazine. It’s not a scientific paper, but then the image of the hand pointing at a brain scan has absolutely nothing to do with the article, which discusses ‘advanced scope physiotherapy roles across Australia’. The author/editor/publisher clearly thought, though, that a stock image of a brain would lend credibility to whatever it is they were writing.

…Thought without learning is perilous

-Confucius

Go and read the whole chapter; it’s not particularly long and it will help sharpen your thinking – I can guarantee it and there is science to back up my claim…

Shah et al. offer some thoughts on overcoming the problem. A sharper focus during education is vital (and as lifelong learners, it’s never too late to start):

Thus science instruction as early as k-12 should not only focus on scientific inquiry skills, but also critical thinking skills in the context of science evidence evaluation. Students can be taught to challenge evidence without losing trust in science as an enterprise. They can be taught both to trust scientific authority in general and to take novel scientific findings with a grain of salt. (emphasis added)

This is such a critical point: skepticism and critique aren’t anti-science – they just are science. As always, Carl Sagan said it best:

Go and science better.

-Tim Cocks
